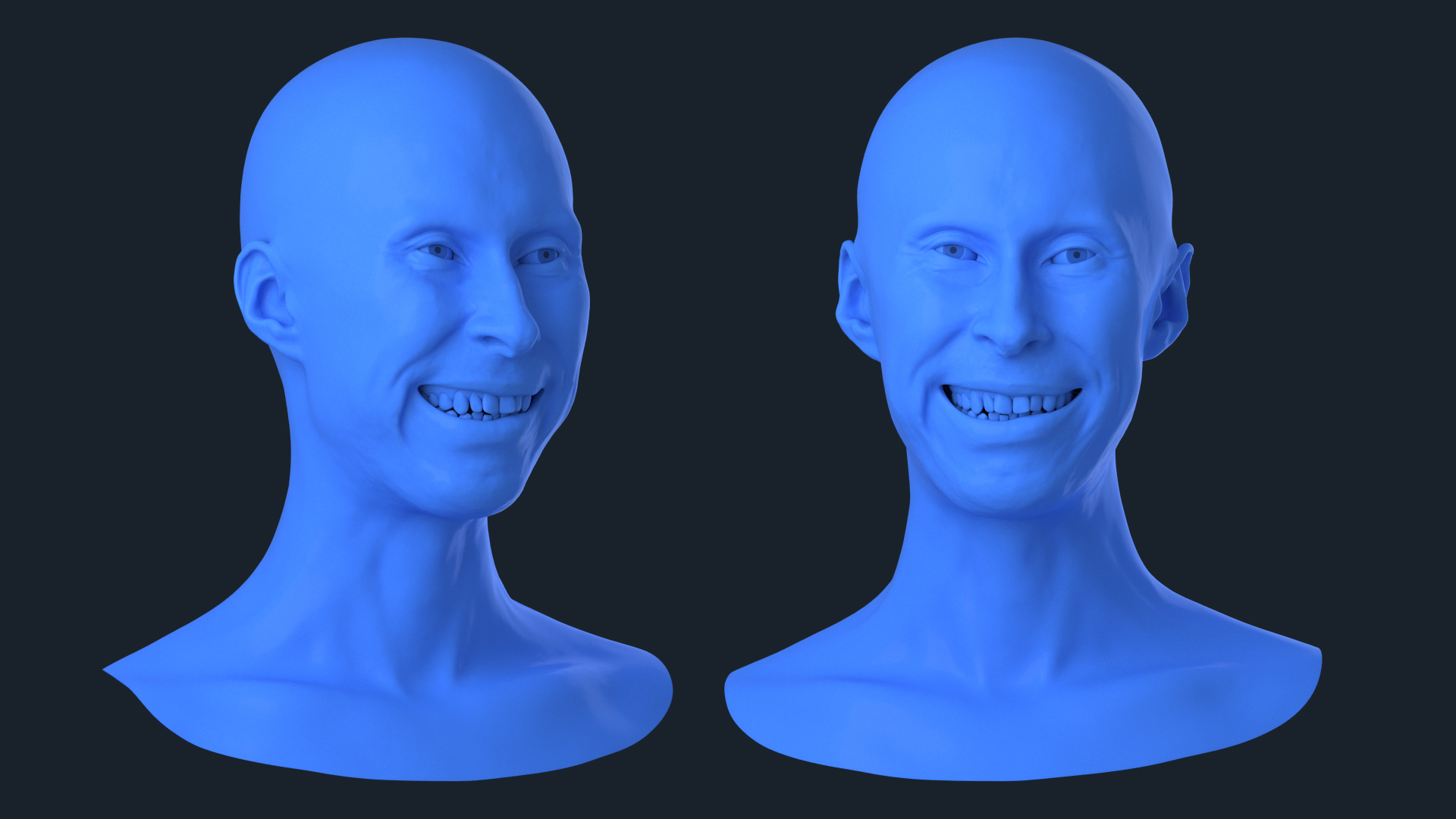

Art by Smir Mir

What's new in Wrap4D

4D Capture Using Two Cameras on a Helmet

The new Image Facial Wrapping tool provides high-fidelity facial animation that was previously achievable only with seated 4D capture rigs.

Now you can process 4D sequences using just two cameras mounted on a helmet. This lets you capture the facial performance at the same time as the body mocap.

New Nodes and Tools in Wrap4D

Stereo Camera Calibration

Calibrate two cameras on the HMC using a checkerboard sequence

Image Facial Wrapping

Fit the mesh to every frame of an HMC sequence by optimizing the shape, the texture, and the lighting until they match the images from the cameras

Guidable Head Stabilization

Stabilize the head on an HMC sequence by using a set of pre-stabilized FACS expressions

Guidable Replace

Predict the shape of the neck, the ears, and the sides of the head by analyzing the front part of the face

Guidable Texture

Generate a dynamic color texture for every frame of an HMC sequence based on a set of textured FACS scans from a photogrammetry rig

Guidable Delta Mush

Increase the resolution of a 4D sequence using a set of high-resolution FACS samples

Guidable Mesh

Predict the lower teeth position for every frame using a set of FACS expression examples with pre-aligned teeth

Fit Eye Directions

Find rotations of the eyeballs for every frame of the sequence by matching them pixel-to-pixel to the camera images

Interpolate Eyes

Predict the gaze direction for frames with the eyelids closed by interpolating the rotations between frames where the eyeballs are visible
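To illustrate the idea behind Interpolate Eyes, here is a minimal sketch that fills in eye rotations for closed-eyelid frames from the nearest frames where the eyeballs are visible. It is not the node's implementation: the (yaw, pitch) representation in degrees, the use of plain linear interpolation, and the function name `fill_closed_frames` are all assumptions made for this example.

```python
def fill_closed_frames(rotations):
    """Fill missing eye rotations by linear interpolation.

    rotations: list with one entry per frame. Each entry is a
    (yaw, pitch) tuple in degrees, or None where the eyelids are
    closed and no rotation could be fitted (hypothetical layout).
    """
    known = [i for i, r in enumerate(rotations) if r is not None]
    if not known:
        raise ValueError("need at least one frame with visible eyeballs")

    filled = list(rotations)
    for i, r in enumerate(rotations):
        if r is not None:
            continue
        # Nearest visible frames before and after the closed frame.
        prev = max((k for k in known if k < i), default=None)
        nxt = min((k for k in known if k > i), default=None)
        if prev is None:
            filled[i] = rotations[nxt]    # no earlier sample: hold the next one
        elif nxt is None:
            filled[i] = rotations[prev]   # no later sample: hold the previous one
        else:
            t = (i - prev) / (nxt - prev)
            a, b = rotations[prev], rotations[nxt]
            filled[i] = tuple(x + t * (y - x) for x, y in zip(a, b))
    return filled


# A three-frame blink between two frames with visible eyeballs.
blink = [(0.0, 0.0), None, None, None, (8.0, -4.0)]
print(fill_closed_frames(blink))
```

Linear interpolation of two angles is a reasonable approximation for the small rotations that occur across a blink. For larger rotations, interpolating quaternions would be the more robust choice.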

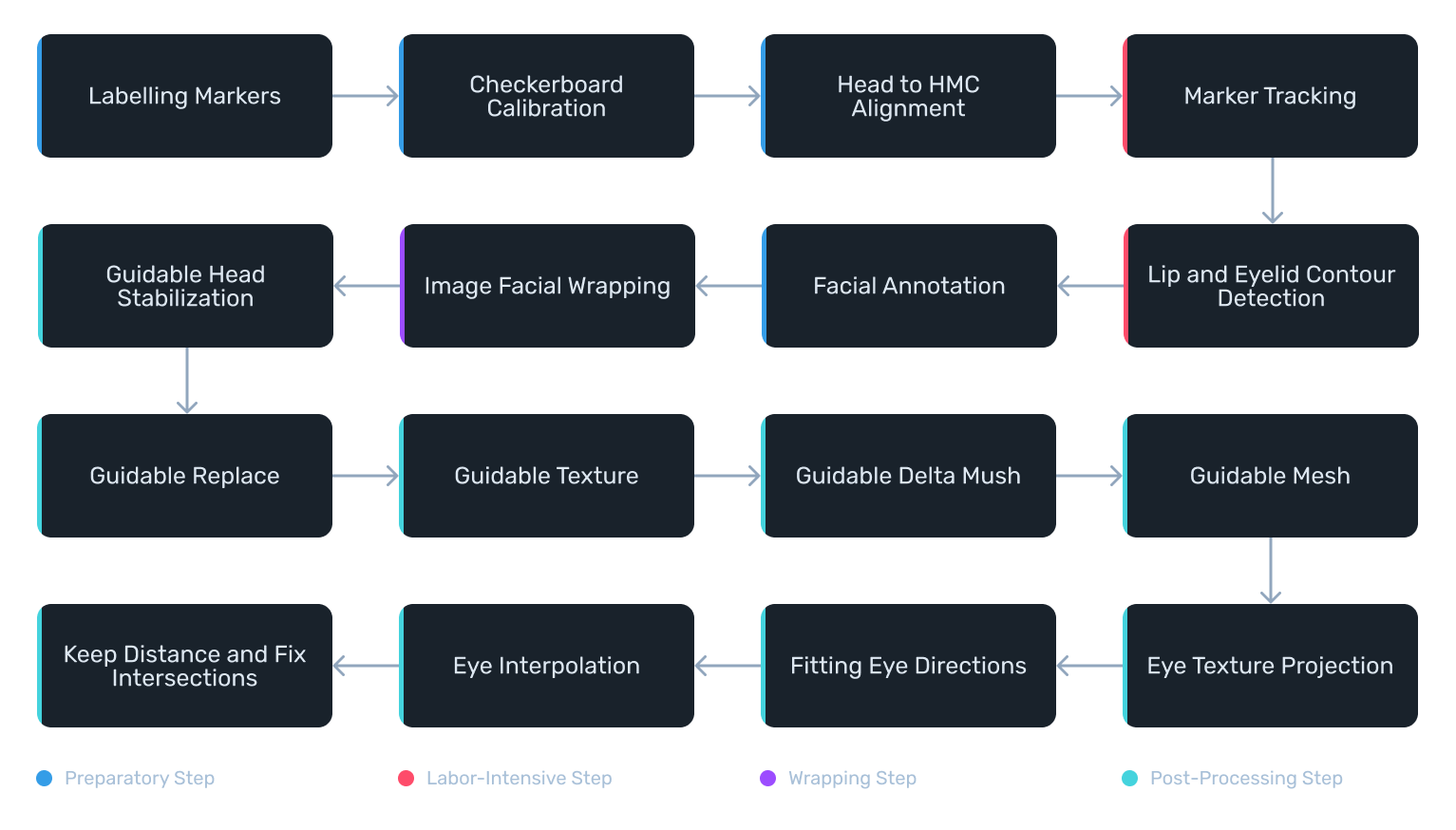

Processing Steps

The processing consists of 16 steps, each of which has a dedicated sample project and a video tutorial

4D HMC Processing Tutorials

Frequently Asked Questions

When should I use this pipeline?

- when you need a high-fidelity facial capture but don’t have access to a 4D capture rig

- when you need to capture 4D sequences simultaneously with the body mocap

- when you need to achieve maximal actor likeness

- when you need to generate training data of the actor’s face for a neural net application

What input data do I need to use it?

- a performance sequence captured on a 2-camera HMC. The actor should have markers on their face

- a checkerboard calibration sequence captured before or after the shooting

- a set of FACS scans captured in a photogrammetry rig. The scans should be wrapped into a consistent topology and stabilized

- a daily scan that has the same set of markers that was used during the capture. The daily scan can be captured using an iPhone, a hand-held camera, or a photogrammetry rig

Does it work with a beard?

No. Facial hair leads to a poor geometry fit.

What HMC can I use?

The pipeline is designed to work with any stereo-camera HMC with a vertical stereo pair. We highly recommend using strobe lights.

So far, it has been tested with Technoprops, SDeviation, and Faceform HMCs.

Does it use a facial rig?

Can I convert the results into rig controls?

No, not yet. We are working on that. Note, however, that converting the results into blendshape rig controls would lose a significant amount of animation nuance.

Can I edit the animation result?

No. The output is a point-cache animation that is not editable. We are working on an editable solution.

Can I retarget the animation to a different character?

We are working on a retargeting tool, but it’s not yet available. If the actor and the character look alike, you can use a naive approach and transfer the animation deltas directly.
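The naive delta transfer mentioned above can be sketched as follows. This is an illustration under stated assumptions, not a Wrap4D feature: it assumes the actor and character meshes share the same topology (same vertex count and order), and the function name `transfer_deltas` and its `scale` parameter are hypothetical.

```python
def transfer_deltas(actor_neutral, actor_frame, character_neutral, scale=1.0):
    """Apply the actor's per-vertex offset from neutral to the character.

    Each mesh is a list of (x, y, z) tuples. All three meshes must have
    the same vertex count and order (an assumption of this sketch).
    """
    if not (len(actor_neutral) == len(actor_frame) == len(character_neutral)):
        raise ValueError("all meshes must share the same vertex count and order")

    result = []
    for a0, a1, c0 in zip(actor_neutral, actor_frame, character_neutral):
        # delta = actor_frame - actor_neutral, added onto the character's neutral
        result.append(tuple(c + scale * (p - q) for c, p, q in zip(c0, a1, a0)))
    return result


actor_neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
actor_frame = [(0.0, 0.5, 0.0), (1.0, 0.0, 0.25)]
character_neutral = [(0.0, 0.0, 1.0), (2.0, 0.0, 1.0)]
print(transfer_deltas(actor_neutral, actor_frame, character_neutral))
```

Because the deltas are copied in world space without any adaptation to the character's proportions, the result degrades quickly as the two faces diverge, which is why this only suits look-alike actor and character pairs.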

Can I use this tool with a static 4D rig?

Yes. For now, it works with two cameras on an HMC or two static machine-vision cameras.

Do I need the facial markers?

Yes. The ImageFacialWrapping node processes each frame independently. For each frame, we need to be able to match a neutral face with any extreme facial expression.

The markers are needed to help find the correspondences where the changes in skin appearance are too big.

How much animation can I process per day?

We normally perform processing with a team of people dedicated to specific processing steps: tracking, detection, and wrapping.

The person responsible for tracking handles up to 30 seconds of footage a day for a single camera view. The person responsible for detection produces around 30 seconds of detection a day. The ImageFacialWrapping step takes 5 to 10 minutes per frame, so we normally run it on multiple machines overnight.

Given these figures, the size of your team, and the number of machines, you can estimate the total processing time.
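As a rough worked example, the estimate can be sketched from the throughput figures above. Several inputs are assumptions made for illustration only: the frame rate, the number of camera views that need tracking, the idea that the detection rate applies to the sequence as a whole, and machines running 24 hours a day. The function name `estimate_days` is hypothetical.

```python
import math


def estimate_days(seconds, fps, views=2, trackers=1, detectors=1, machines=1,
                  track_sec_per_day=30.0, detect_sec_per_day=30.0,
                  wrap_min_per_frame=10.0, machine_hours_per_day=24.0):
    """Return a rough duration in days for each processing stage."""
    # Tracking: up to 30 s of footage per person per day, per camera view.
    tracking = seconds * views / (track_sec_per_day * trackers)
    # Detection: around 30 s per person per day (assumed per sequence).
    detection = seconds / (detect_sec_per_day * detectors)
    # Wrapping: 5 to 10 minutes per frame; the upper bound is the default.
    frames = math.ceil(seconds * fps)
    wrapping_hours = frames * wrap_min_per_frame / 60.0
    wrapping = wrapping_hours / (machine_hours_per_day * machines)
    return {"tracking": tracking, "detection": detection, "wrapping": wrapping}


# 60 s of performance at an assumed 30 fps, two trackers, one detection
# artist, and ten machines for wrapping.
print(estimate_days(60, 30, views=2, trackers=2, detectors=1, machines=10))
# {'tracking': 2.0, 'detection': 2.0, 'wrapping': 1.25}
```

The stages are reported separately because how much they overlap depends on how the team schedules the work.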

Can I process action/running/fight scenes?

We don’t recommend using this pipeline for extreme scenes. The wrapping process will capture all the secondary dynamic effects of the skin. Such sequences are very hard to stabilize with the current set of tools.

Can I use it for realtime streaming?

No. The processing of 4D sequences is a computationally intensive offline process.

Do you provide services for processing clients' data?

Yes. Our services department can process your data in a very short time. Please contact us for more details.

What's new in Wrap

New Nodes and Tools in Wrap

16 and 32-bit Depth Image Support

Now you can work with 16- and 32-bit depth images, including the OpenEXR format

Multiple Inputs and Outputs in Nodes

Some nodes, such as Render, Blendshapes, and MergeGeom, can now work with an unlimited number of inputs

PLY Format Support

You can save a lot of disk space by storing sequences of meshes that share the same topology in a binary PLY format that stores only the vertex positions

Stick to Surface

Given a set of meshes in one topology, you can automatically convert them into another topology

Fix Intersections

Now you can fix self-intersections on your models completely automatically

Keep Distance

Create a perfect contact between the eyelid and the eyeball geometry with no gaps or intersections

Cartoon Wrapping

Wrap cartoon characters and creatures more easily with the new Cartoon Wrapping node

Load Frame from Video

Load frames from video files just like you load images and textures

Merge Geom

Merge multiple meshes into a single geometry

Undistort Image

Fix camera distortion effects on photogrammetry images

Bake Transform

Bake the current geometry transforms into vertex positions

Extended Camera Import Options

Import cameras in FBX from Blender, Maya, Reality Capture
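The PLY Format Support entry above relies on the fact that meshes sharing a topology differ only in their vertex positions, so the faces and UVs need to be stored just once. The sketch below writes and reads a vertex-only binary little-endian PLY file using the standard PLY header keywords. It is an illustration only: the exact layout Wrap writes is not described here, and the function names are hypothetical.

```python
import struct


def write_vertex_ply(path, vertices):
    """Write (x, y, z) float32 positions to a vertex-only binary PLY file."""
    header = (
        "ply\n"
        "format binary_little_endian 1.0\n"
        f"element vertex {len(vertices)}\n"
        "property float x\n"
        "property float y\n"
        "property float z\n"
        "end_header\n"
    ).encode("ascii")
    with open(path, "wb") as f:
        f.write(header)
        for x, y, z in vertices:
            f.write(struct.pack("<3f", x, y, z))  # 12 bytes per vertex


def read_vertex_ply(path):
    """Read back the positions written by write_vertex_ply."""
    with open(path, "rb") as f:
        data = f.read()
    marker = b"end_header\n"
    body_start = data.index(marker) + len(marker)
    count = None
    for line in data[:body_start].decode("ascii").splitlines():
        if line.startswith("element vertex"):
            count = int(line.split()[2])
    if count is None:
        raise ValueError("no vertex element found in PLY header")
    return [struct.unpack_from("<3f", data, body_start + 12 * i)
            for i in range(count)]
```

With this layout, each frame costs a short header plus 12 bytes per vertex, regardless of how many faces or texture coordinates the shared topology has.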

What's new in ZWrap

Fast Wrapping

Wrap your models up to 20 times faster

Cartoon Wrapping

Process cartoon characters and creatures more easily with the new Cartoon Wrapping tool

Maxon ZBrush® Support

Use ZWrap with the latest versions of Maxon ZBrush®

What's new in Track

New Features in Track

Video Import

Load sequences as video files in MOV, MP4, AVI, and MKV formats

HMC Neural Net Detector

When working with HMC sequences, use a dedicated neural net for better detection of lip and eyelid contours

Improved Contour Fitting

Use the new AGILE method, which provides better contour fitting with fewer training frames

Fast Import/Export for Markers and Contours

Import/export markers and contours faster

Interpolation of Detections

Fix detection problems by interpolating detected contours between selected frames

Small Improvements

- Auto-Save

- Variable Paths

- Editable Shortcuts

- Timeline Snapping and Cycling

- Frame Preview Parameters